LEARNING WITH STUDENT TEACHER NETWORK MODELS

The animal brain is undoubtedly one of the least understood biological structures in the known universe. The role of single neurons in information processing is very well studied mainly due to the relative ease with which their electrical activity may be recorded

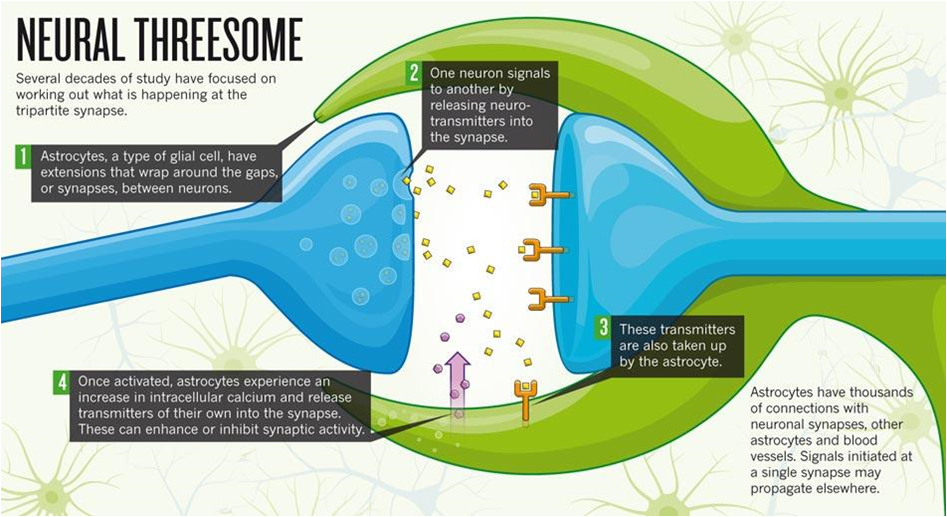

through electrodes inserted into the brain. However, the brain is composed of many other cells and all their functions are not thoroughly studied. The human brain, for instance has a 1:1 count of neurons and 'glial cells' (Glia - Greek for 'glue'). Astrocytes, a particular type of glial cells are known to comprise

about 20-40% of all glial cells in the human brain. They are "electrically silent" or passive and for a very long time were thought to only help with the neuronal nourishment and structural support. However, very recently when Calcium Imaging first started finding application in analysing neuronal activity, astrocytes caught

the attention of researchers. Astrocytes were seen to communicate through Calcium waves with networks of neurons, and also with other astrocytes forming a network amongst themselves. Further studies have shown that a single astrocyte is capable of sensing activity at tens of thousands of synapses (connection between neurons) through

tight associations with the presynaptic (sender) and postsynaptic (receiver) neuron ends thus forming a 'tripartite synapse'. What is even more exciting is the fact that they are capable of sensing the communication at a synapse and stop a presynaptic neuron from firing, or even trigger the firing of a postsynaptic neuron at their own discretion.

Astrocytic networks are attracting a lot of interest in neuroscience research and are increasingly thought to play a crucial part in neural signal transmission.

Modeling and emulating the functional brain has long been considered one of the most promising approaches to achieve human-level intelligence, or Artificial General Intelligence.

Before taking the plunge into deep learning research, I used to think that computer scientists first had to understand and study the brain in order to come up with novel modeling approaches for machine learning problems.

Something about the brain and the mystery behind it actually resonated with my sense of adventure, and motivated me to get on the bandwagon.

It is true that Deep Learning drew much inspiration from the brain in its nascent stages, but it later spun off in its own direction, one that enabled it to solve many practical real-world problems efficiently. In today's deep learning research, very little work actually builds on ideas inspired by the brain. In fact, what most deep learning researchers know about the brain rarely goes beyond the hierarchical, layer-wise organization of biological neural networks that motivated the early Artificial Neural Network (ANN) architectures. Given the rather sluggish progress made in neuroscience research thus far, it might be reassuring to know that advances in deep learning do not necessarily depend on gaining new knowledge about the brain. However, if you are someone like me with a fascination for understanding the brain, you might notice a few connections that modern deep learning research is making with ideas from neuroscience. In the rest of this blog post, I would like to discuss how student-teacher networks are being applied in deep learning models and the connections they have to explaining the brain.

Even before Hubel and Wiesel's cat experiments (which revealed the hierarchical organization of the visual cortex, late 1950s and 1960s) and the proposal of backpropagation (the learning algorithm behind ANNs' success, 1970s), Hodgkin and Huxley had developed (1952) their landmark mathematical model of how a single neuron generates electrical spikes. Early neuron models were deeply inspired by everything known at the time about the brain. However, early networks built from all-or-none threshold units, such as the single-layer perceptron, fell short as a pattern recognition technique and failed on very simple problems (a single threshold unit cannot solve XOR, for example). It took another couple of decades for researchers to discard the then-prevalent idea of a neuron as a discrete processing unit and adopt continuous nonlinearities in the model, which made learning in these networks (through backpropagation) a tractable problem. This marked a major shift in neural network research and led to the ANNs we know today. However, a small section of researchers chose to stick with the discrete, spiking neuron model so as to capture a realistic mapping of what actually goes on in the brain, and these network models came to be known as 'Spiking Neural Networks' (SNNs).
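The difference between a discrete unit and a continuous one is easy to see in code. The minimal Python sketch below (all function names are hypothetical, chosen for illustration) compares the derivative of an all-or-none step unit, which is zero wherever it is defined, with that of a sigmoid, which is never zero. It also brute-forces a coarse grid of weights to show that a single threshold unit can represent AND but not XOR. The grid search only illustrates the point; the XOR result holds for all real weights because XOR is not linearly separable.

```python
import math
from itertools import product

def step(z):
    """Discrete (all-or-none) unit: outputs 1 if z >= 0, else 0."""
    return 1.0 if z >= 0 else 0.0

def step_grad(z):
    """Derivative of the step unit: zero everywhere except z = 0, where it is
    undefined; we return 0 as a convention. Gradient descent gets no signal."""
    return 0.0

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_grad(z):
    """Smooth derivative: nonzero for every z, so errors can flow backwards."""
    s = sigmoid(z)
    return s * (1.0 - s)

def single_unit_solves(table, grid):
    """Search for weights (w1, w2, b) of ONE threshold unit that reproduce
    a 2-input truth table; return the first match, or None."""
    for w1, w2, b in product(grid, repeat=3):
        if all(step(w1 * x1 + w2 * x2 + b) == y for (x1, x2), y in table.items()):
            return (w1, w2, b)
    return None

AND = {(0, 0): 0, (0, 1): 0, (1, 0): 0, (1, 1): 1}
XOR = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}
GRID = [k / 2 for k in range(-6, 7)]  # weights and bias in [-3, 3], step 0.5

print(single_unit_solves(AND, GRID))  # (0.5, 0.5, -1.0)
print(single_unit_solves(XOR, GRID))  # None: XOR is not linearly separable
print(step_grad(0.3), sigmoid_grad(0.0))  # 0.0 0.25
```

Backpropagation multiplies such derivatives layer by layer, so a zero derivative blocks learning entirely, while the sigmoid's smooth slope lets error signals reach earlier layers.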

The constraint of having to map the biological circuitry hindered progress in SNN research, and SNNs were slow to gain traction against ANN models, which offered added flexibility and mathematical ease by eschewing neural dynamics.

This prompted researchers working with SNNs to explore the idea of hypothesizing about the functional brain via theoretical analysis and mathematical modeling consistent with biological neural systems. In fact, Hodgkin and Huxley's pioneering work on the single neuron model was itself built from mathematical assumptions that could explain the results they observed in actual neurons. It is interesting to note that the validity of their model assumptions was established by neuroscientists only much later.
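Hodgkin and Huxley described the neuron with coupled differential equations for its membrane voltage and ion-channel gating. As a much simpler illustration of the same modeling style, here is a minimal sketch of a leaky integrate-and-fire (LIF) neuron, a standard simplification used in SNNs and not the Hodgkin-Huxley model itself. The membrane potential leaks toward rest, integrates its input, and emits an all-or-none spike followed by a reset whenever it crosses a threshold. The function name and all parameter values (time constant, threshold, input currents) are illustrative assumptions in dimensionless units.

```python
def lif_spike_times(current, t_max=1.0, dt=1e-4, tau=0.02, v_rest=0.0,
                    v_thresh=1.0, v_reset=0.0, r=1.0):
    """Simulate a leaky integrate-and-fire neuron driven by a constant input.

    Dynamics: tau * dv/dt = -(v - v_rest) + r * current,
    integrated with the forward Euler method. Returns spike times (seconds).
    """
    v = v_rest
    spikes = []
    for n in range(int(t_max / dt)):
        # Leak toward rest plus integration of the input current
        v += dt / tau * (-(v - v_rest) + r * current)
        if v >= v_thresh:
            spikes.append(n * dt)  # discrete, all-or-none event
            v = v_reset            # membrane potential is reset after a spike
    return spikes

for i in [0.5, 1.5, 3.0]:
    print(i, len(lif_spike_times(i)))
```

With these assumed parameters, an input of 0.5 never drives the membrane to threshold, so the neuron stays silent, while larger inputs produce more spikes per second. A firing rate that grows with input strength is the simplest way a train of discrete spikes can carry a continuous quantity.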

I find it super exciting that research in SNNs started out by drawing on the brain's structure and information processing mechanisms to design practical network models, and has now come full circle to help uncover secrets of the brain itself by positing models that explain its dynamics.

In 2016, Larry Abbott a leading theoretical neuroscientist proposed a functional spiking neuron network that could use the 'discontinuous' spiking neurons to learn from 'continuous' stimuli. The neurons in animals are known to communicate in

discrete spikes, much like how we tap a Morse code but in the order of milliseconds, and this sort of discontinuos communication between neurons is somehow able to learn continuously varying stimuli that we see around us, for example - the motion of bodies. To fully grasp the challenges behind the same, think of how predicting a moving agent's future position is

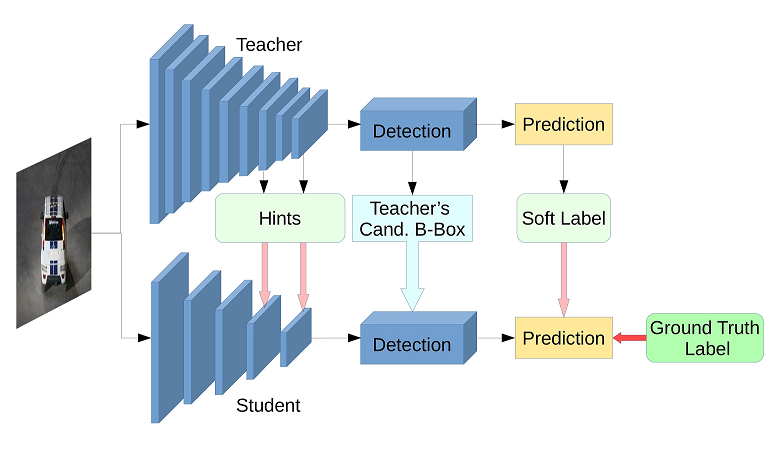

influenced by the knowledge of its fairly recent trajectory. ANNs bypass this problem by using continuous variables, but SNNs achieving this with discrete variables is a major landmark that comes close to explaining neural information processing. In a nutshell, Abbott's idea revolved around the use of a

student-teacher network model, where a student network learns the required input-output mapping through targets it receives from a teacher network that has already learnt to produce the expected output, but from a somewhat transformed version of the input. Thus the problem of learning the student network parameters

is modified into one of identifying the input transformation that causes the teacher to produce the expected output (in a supervised learning setting), and setting appropriate targets for the student to learn the exact input-output mapping. Abbott's model posits that such a student teacher setup might be the explanation behind how we learn new tasks quickly, a concept coomonly referred

to as 'Muscle Memory'. As per the idea, every time we attempt to learn a new task a specialised group of neurons assist a student network until it is trained enough to perform the task autonomously, which sure sounds like a promising explanation to me, but it is best left for the neuroscientists to ascertain.
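Abbott's networks are spiking and recurrent, which is well beyond a short snippet, but the core student-teacher idea can be sketched with a linear toy under loudly stated assumptions. The teacher is a hypothetical, already-trained linear readout that only sees a transformed input (`transform` and `TEACHER_W` are made up for illustration). The student sees the raw input and is trained with the simple least-mean-squares (delta) rule, which is an assumption here and not the training procedure used in Abbott's work.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def transform(x):
    """Hypothetical input transformation that only the teacher sees."""
    return [2.0 * x[0], x[1], x[2] - x[0]]

TEACHER_W = [0.5, -1.0, 1.5]  # hypothetical, already-trained teacher weights

def teacher(x):
    """The teacher produces the expected output from the transformed input."""
    return dot(TEACHER_W, transform(x))

def train_student(inputs, lr=0.1, epochs=500):
    """The student sees the raw input and fits the teacher's outputs with the
    LMS rule: w <- w + lr * (target - prediction) * x."""
    w = [0.0] * len(inputs[0])
    for _ in range(epochs):
        for x in inputs:
            err = teacher(x) - dot(w, x)  # the teacher's output is the target
            w = [wi + lr * err * xi for wi, xi in zip(w, x)]
    return w

train_x = [[1, 0, 0], [0, 1, 0], [0, 0, 1], [1, 1, 1]]
w = train_student(train_x)
test_x = [0.3, -0.7, 2.0]
print([round(wi, 4) for wi in w])
print(round(dot(w, test_x), 4), round(teacher(test_x), 4))
```

The student never sees `transform`; it only receives the teacher's outputs as targets, yet its weights converge to [-0.5, -1.0, 1.5], the teacher's mapping expressed on raw inputs, and both printed predictions for `test_x` are approximately 3.55.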

Now, how much has the ANN research community contributed to explaining the brain? Abbott's idea of SNN models hinged on the student-teacher paradigm which was widely put to use by the deep learning community, primarily as an approach for 'model compression'. Knowledge Distillation, and FitNets were among the pioneering ideas

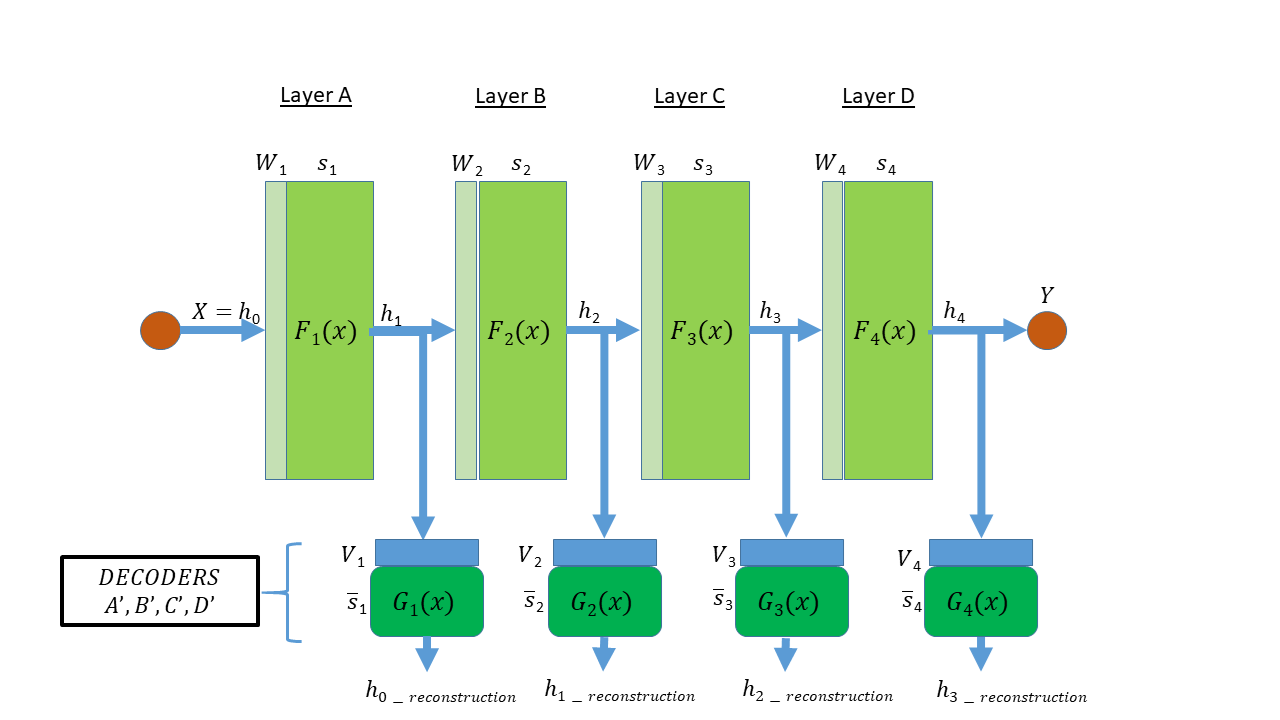

that allowed deep learned models to be successfully deployed in devices with less compute, like mobile phones. The objective of model compression was to teach a wider/deeper network with fewer parameters, from the targets set by a much bulkier network. A much more interesting application of student teacher modelling, and one of my favourites, is the Target

Propagation learning algorithm, which was first put forward by Yann LeCun in 1986 as a method to describe weight updates for each hidden unit of a network. The recent improvisations put forward by Bengio and team, have framed Target propagation as a much more general version of the Backpropagation learning algorithm, that can handle extemely non-linear functions

to the level of discrete mappings. The features generated in the intermediate layers of a student network are reconstructed with regularised autoencoder training, keeping the decoder alone as the teacher network for each layer, and setting these reconstructions as layer-level targets. Since autoencoder training guarantees reconstructions to be of higher likelihoods (than inputs), each layer of

the student network always takes a step in the direction of higher likelihood. Target propagation, despite achieving excellent results in various real-world learning problems, is only sparingly used as Backpropagation continues

to be the go-to choice for researchers and engineers. But nonetheless, the ideas behind Target Prop are what opened the direction of research involving student-teacher networks for learning, like that of Abbott's functional network model which stirred the computational neuroscience research community.
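The per-layer targets described above can be sketched in a few lines. This is a minimal, hypothetical scalar example of difference target propagation, the variant in Lee et al. listed in the references. The top-layer target is a small gradient step on the loss, the lower-layer target is computed as h1_hat = h1 + g2(h2_hat) - g2(h2), and each layer then updates only its own weight toward its own target. As a simplifying assumption, the 'teacher' decoder g2 is the exact analytic inverse of layer 2, whereas in Target Propagation it would be learned with an autoencoder-style reconstruction loss. The two-layer network, initial weights and learning rates are also illustrative.

```python
import math

def forward(x, w1, w2):
    """Hypothetical two-layer scalar network: h1 = tanh(w1*x), h2 = tanh(w2*h1)."""
    h1 = math.tanh(w1 * x)
    h2 = math.tanh(w2 * h1)
    return h1, h2

def g2(h, w2):
    """Decoder ('teacher') for layer 2. Assumed to be the exact inverse here;
    in Target Propagation it is learned with a reconstruction loss."""
    return math.atanh(h) / w2

def dtp_targets(x, y, w1, w2, eta=0.5):
    h1, h2 = forward(x, w1, w2)
    # Top-layer target: a small gradient step on the loss 0.5 * (h2 - y)**2
    h2_hat = h2 - eta * (h2 - y)
    # Difference target propagation: h1_hat = h1 + g2(h2_hat) - g2(h2)
    h1_hat = h1 + g2(h2_hat, w2) - g2(h2, w2)
    return h1, h2, h1_hat, h2_hat

def train(x, y, w1, w2, lr=0.5, steps=200):
    for _ in range(steps):
        h1, h2, h1_hat, h2_hat = dtp_targets(x, y, w1, w2)
        # Local losses: each layer moves its own output toward its own target,
        # with targets treated as constants (no gradient flows through them).
        grad_w2 = (h2 - h2_hat) * (1 - h2 ** 2) * h1
        grad_w1 = (h1 - h1_hat) * (1 - h1 ** 2) * x
        w2 -= lr * grad_w2
        w1 -= lr * grad_w1
    return w1, w2

def loss(x, y, w1, w2):
    return 0.5 * (forward(x, w1, w2)[1] - y) ** 2

x, y = 1.0, 0.5
w1, w2 = train(x, y, 0.5, 0.5)
print(loss(x, y, 0.5, 0.5), loss(x, y, w1, w2))  # loss before vs. after training
```

Because g2 inverts layer 2 exactly in this sketch, moving h1 all the way to h1_hat would make layer 2 output exactly h2_hat. The difference term also guarantees that h1_hat equals h1 whenever h2_hat equals h2, so a layer with no error is left alone even when the decoder is imperfect.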

Will Target Propagation or other biologically realistic learning algorithms ever trump Backpropagation and gain wide-scale adoption? Could some form of interconnected teacher networks, as in neuron-astrocyte networks, be the next step for Target Propagation? Check out the reference section and get started with research in this area!

REFERENCES

Student-teacher networks (STNs) in model compression: FitNets and Knowledge Distillation

- Adriana Romero et al., FitNets: Hints for Thin Deep Nets, ICLR 2015

- Hinton et al., Distilling the Knowledge in a Neural Network, NIPS 2014 Deep Learning Workshop (arXiv:1503.02531)

Target Propagation: Bengio's approach of using autoencoder reconstructions as targets

- Bengio, How auto-encoders could provide credit assignment in deep networks via target propagation. Tech. rep., arXiv:1407.7906 (2014)

- Dong-Hyun Lee et al., Difference Target Propagation, in Machine Learning and Knowledge Discovery in Databases, pages 498–515, Springer International Publishing, 2015

Astrocytes: counts and roles in neuron-astrocyte networks

- Rogier Min et al., The computational power of astrocyte mediated synaptic plasticity, Frontiers in Computational Neuroscience, 2012

- von Bartheld et al., The Search for True Numbers of Neurons and Glial Cells in the Human Brain: A Review of 150 Years of Cell Counting, J. Comp. Neurol. 524, 3865–3895 (2016). doi:10.1002/cne.24040

- Bernardinelli et al., Astrocytes display complex and localized calcium responses to single-neuron stimulation in the hippocampus, J. Neurosci. 31, 8905–8919 (2011)